Living in the Bay Area, I sometimes rub elbows with people who are friendly, thoughtful and very intelligent, but who seem to live in a different dimension. I mean the workers who are busy digging in the Uncanny Valley, looking for gold.

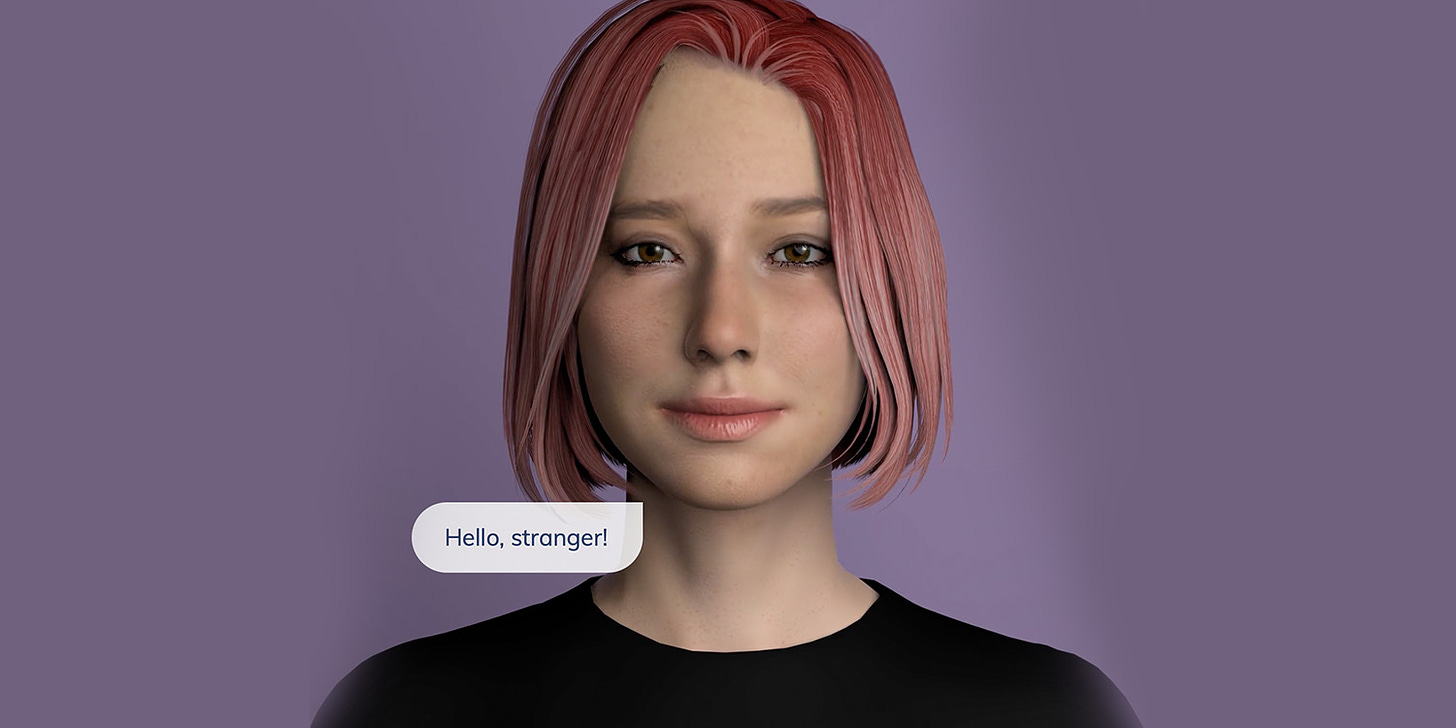

On Tuesday I participated in a panel discussion on AI. It was a small event in San Francisco, put on by some venture capitalists and academics from Harvard. There were people from OpenAI and Tinder, among other firms, as well as the CEO of Replika, which peddles AI “companions” and has 30 million users. You can design your own Replika — someone who is “Always here to listen and talk. Always on your side.”

Asked what metaphor comes to mind when thinking about AI, I offered two:

In the Hebrew tradition, a golem is a crude anthropomorphic creature made of clay or mud, brought to life by ritual incantations. In the Mishnah, the word “golem” refers to someone who is unsophisticated; in modern Hebrew it simply means dumb. “If commanded to perform a task, a golem will perform the instructions literally. In many depictions, golems are inherently perfectly obedient,” according to Wikipedia.

Also from Wikipedia: In the world of the occult, an egregor is an independent, artificial being that arises from a collective mind. It may be created deliberately, as an “efficacious magical ally, but the egregor’s help comes at a price, since its creators must thenceforth meet its unlimited appetite for future devotion.”

I went on to deliver a much-condensed version of my post “AI as self-erasure.” My point was to consider how the use of chatbots is likely to affect human subjectivity, and I took up the case of a man who outsources to ChatGPT the composition of a toast he will deliver at his daughter’s wedding. To do this would be to not show up for her wedding, in some sense that seems important. We are the language animal, so LLMs are aimed right at our essence, as Talbot Brewer put it in a lecture on “degenerative AI” several months ago in Charlottesville.

I think it is safe to say I won’t be getting any funding from the VCs.

The wisest person in attendance was Sherry Turkle, and the most interesting exchange was between her and the founder-CEO of Replika, a woman named Eugenia Kuyda. Turkle pointed out that apologetics for tech often come in the form of a slippery slope that begins by invoking some disability or other. She told a story from her own research (she interviews people about their use of various technologies) of a family who got an AI robot dog for grandma. They got the robot because grandma is allergic to dogs, and wouldn’t it be nice for grandma to have companionship? It’s better than nothing! When Turkle revisited the family a few months later, they’d gotten rid of their own dog and replaced it with a robot. She says the usual trajectory is from “better than nothing” to “better than anything,” where “better” means without friction between self and world. The real is displaced by the fake.

When people begin using AI companions, they have no illusions that they are talking to a real person. But something funny happens along the way, and users invest themselves in the relationship as though it were real, leading to a kind of split in the person — living apart from reality as his rational mind knows it to be. The reflexive character of chatbots (they learn and adapt to you) makes them especially powerful generators and sustainers of fantasy.

Turkle made the point that AI companions aren’t qualified to converse with you about life’s challenges because they don’t have standing to do so: they haven’t lived a life. They haven’t suffered loss or fallen in love or struggled with parenthood. I thought this was a nice point.

Kuyda, the Replika founder, is alert to humanistic critiques of AI companions such as Turkle offers, and seems to take them seriously, but is apparently not ready to allow them to interrupt the great march forward. To be fair, she strongly endorsed two limiting principles: not offering Replika to children (that would be an unregulated social experiment on developing minds), and not tying the firm’s revenue to “engagement” (which is Valley speak for addiction-by-design). These are both very important restraints, as far as they go. Or would be, if they could be sustained against the demands of investors for maximum returns.

This is really great. Thanks for this perspective. Two things spring immediately to mind. First, there is something deeply spellbinding for human beings about machines that use language. This was first observed in the sixties, but LLMs are like a nuclear bomb in this regard. Second, there is something about the anthropomorphic language that has taken hold with AI that jams people's ability to think cogently about it. Full disclosure: my work is in building software tools to diagnose and optimize software performance on the very machines that run the largest models. Those same kinds of machines are used around the world for computational science, but have found a lucrative home in AI. So I find myself working as an unexpected enabler of some of this. Alas.

It's hard to see anything good coming from the kind of "split", as you call it, in which a person operates as if things that are essentially false (a robot dog is not a dog, an AI girlfriend is not a woman) are nevertheless true. But it also strikes me that a determination to operate within a false reality is increasingly characteristic of our time, and holds striking similarities to such recent phenomena as, say, transgenderism, or the determination to live during covid as if we had learned nothing about natural immunity.

"Light came into the world", the apostle John said, "but men loved darkness."

https://www.keithlowery.com/p/is-ai-demonic

I recently came across this first-person essay in the CBC. I read it as I walked to teach my class of first-year university students and shared parts of it with them as we settled into class. Hearteningly, they were amused and horrified. The young want real life (and most of them presumably want to have sex with a real person who has a real body). But, on the other hand, most of them feel little compunction about getting an LLM to write all or portions of their essays. (I have had to go back to the dark ages of in-class pencil and paper essays in an effort to remove this temptation from them.) What concerns me is that they don't seem to make the connection between not showing up in their thinking and not showing up in their personal lives. They all want to be *unique individuals* but somehow fail to grasp that this happens through a real engagement with their mind through language.

I've copied the CBC essay here. The guy writing it seems pretty normal, which is why this seems particularly sad.

https://www.cbc.ca/radio/nowornever/first-person-ai-love-1.7205538